Value Function Approximation

In the previous post, we introduced TD learning algorithms. There, all state and action values were stored in tables, which becomes inefficient when the state or action space is large.

In this post, we will use the function approximation method for TD learning. It is also where artificial neural networks are incorporated into reinforcement learning as function approximators.

TD learning with function approximation

With function approximation methods, the goal of TD learning is equivalent to finding the best parameter of the approximator, that is, the one that minimizes an objective function.

The expectation in

In reinforcement learning (RL), we commonly use the stationary distribution of

The stationary distribution of

Let

Optimization algorithms

The loss function

Now that we have the objective function, the next step is to optimize it.

To minimize

The true gradient is

The true gradient above involves the calculation of an expectation. We can use the stochastic gradient to replace the true gradient:
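As a hedged sketch of this replacement, assume the common squared-error objective $J(w)=\mathbb{E}\big[(v_\pi(S)-\hat v(S,w))^2\big]$, where $w$ is the parameter vector, $\hat v$ is the approximator, and $\alpha_t$ is a step size. This notation is an assumption made here for illustration. Under that assumption, the true gradient and the sample-based update would read:

$$
\nabla_w J(w) = -2\,\mathbb{E}\big[(v_\pi(S)-\hat v(S,w))\,\nabla_w \hat v(S,w)\big]
$$

$$
w_{t+1} = w_t + \alpha_t\,\big(v_\pi(s_t)-\hat v(s_t,w_t)\big)\,\nabla_w \hat v(s_t,w_t)
$$

The factor 2 is absorbed into $\alpha_t$. Since $v_\pi(s_t)$ is unknown, it has to be replaced by an estimate, such as a sampled return or a TD target.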

In particular,

- First, Monte Carlo learning with function approximation: Let

Putting these together, the algorithm becomes

Therefore, we can solve TD learning problems[^1] with the function approximation method.
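To make the update concrete, here is a minimal sketch of TD(0) policy evaluation with a linear approximator. Everything specific in it is an assumption for illustration: the 5-state random walk environment, the two-dimensional feature map `features`, the constant step size, and all function names. With a linear approximator, the gradient of the estimate with respect to the parameters is simply the feature vector.

```python
import random

def features(s, n_states=5):
    """Hypothetical feature map: a bias term plus the normalized state index."""
    return [1.0, s / (n_states - 1)]

def v_hat(w, phi):
    """Linear value estimate: inner product of parameters and features."""
    return sum(wi * xi for wi, xi in zip(w, phi))

def td0_update(w, phi, reward, phi_next, gamma, alpha, terminal):
    """One TD(0) step; for a linear approximator the gradient w.r.t. w is phi."""
    target = reward + (0.0 if terminal else gamma * v_hat(w, phi_next))
    td_error = target - v_hat(w, phi)
    return [wi + alpha * td_error * xi for wi, xi in zip(w, phi)]

def evaluate_random_walk(episodes=3000, n_states=5, gamma=1.0, alpha=0.02, seed=0):
    """Evaluate the uniform random policy on an assumed random-walk chain.

    Leaving the chain on the right pays +1, leaving on the left pays 0.
    """
    rng = random.Random(seed)
    w = [0.0, 0.0]
    for _ in range(episodes):
        s = n_states // 2  # start in the middle state
        while True:
            s_next = s + rng.choice((-1, 1))
            terminal = s_next < 0 or s_next >= n_states
            reward = 1.0 if s_next >= n_states else 0.0
            phi_next = [0.0, 0.0] if terminal else features(s_next, n_states)
            w = td0_update(w, features(s, n_states), reward, phi_next,
                           gamma, alpha, terminal)
            if terminal:
                break
            s = s_next
    return w

if __name__ == "__main__":
    w = evaluate_random_walk()
    for s in range(5):
        print(s, round(v_hat(w, features(s)), 3))
```

Two parameters stand in for five table entries here. That works in this toy case because the true values of the assumed chain happen to be linear in the state index; in general a linear approximator only yields an approximation.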

TD learning of action values based on function approximation

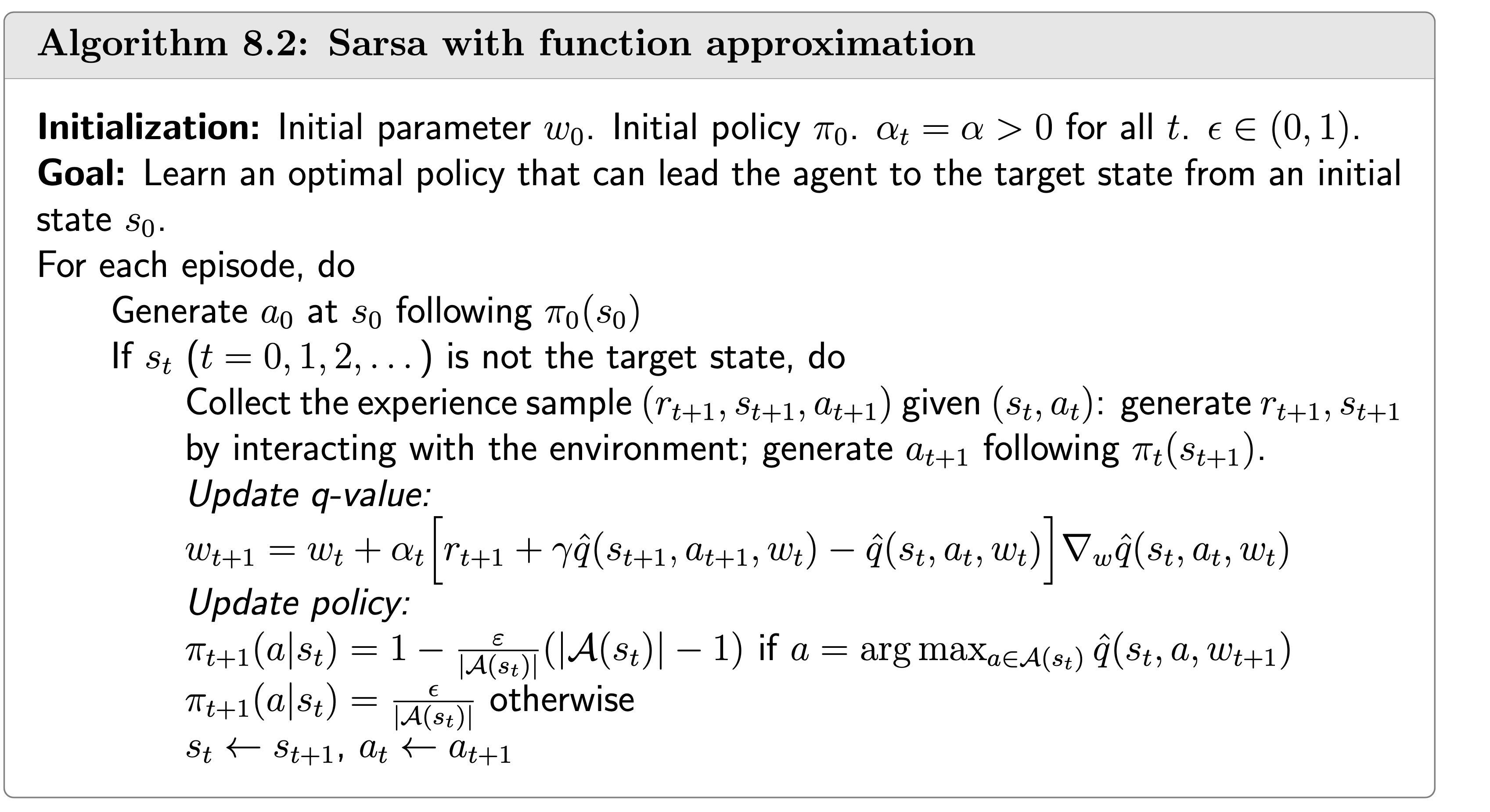

Sarsa with function approximation

Suppose that

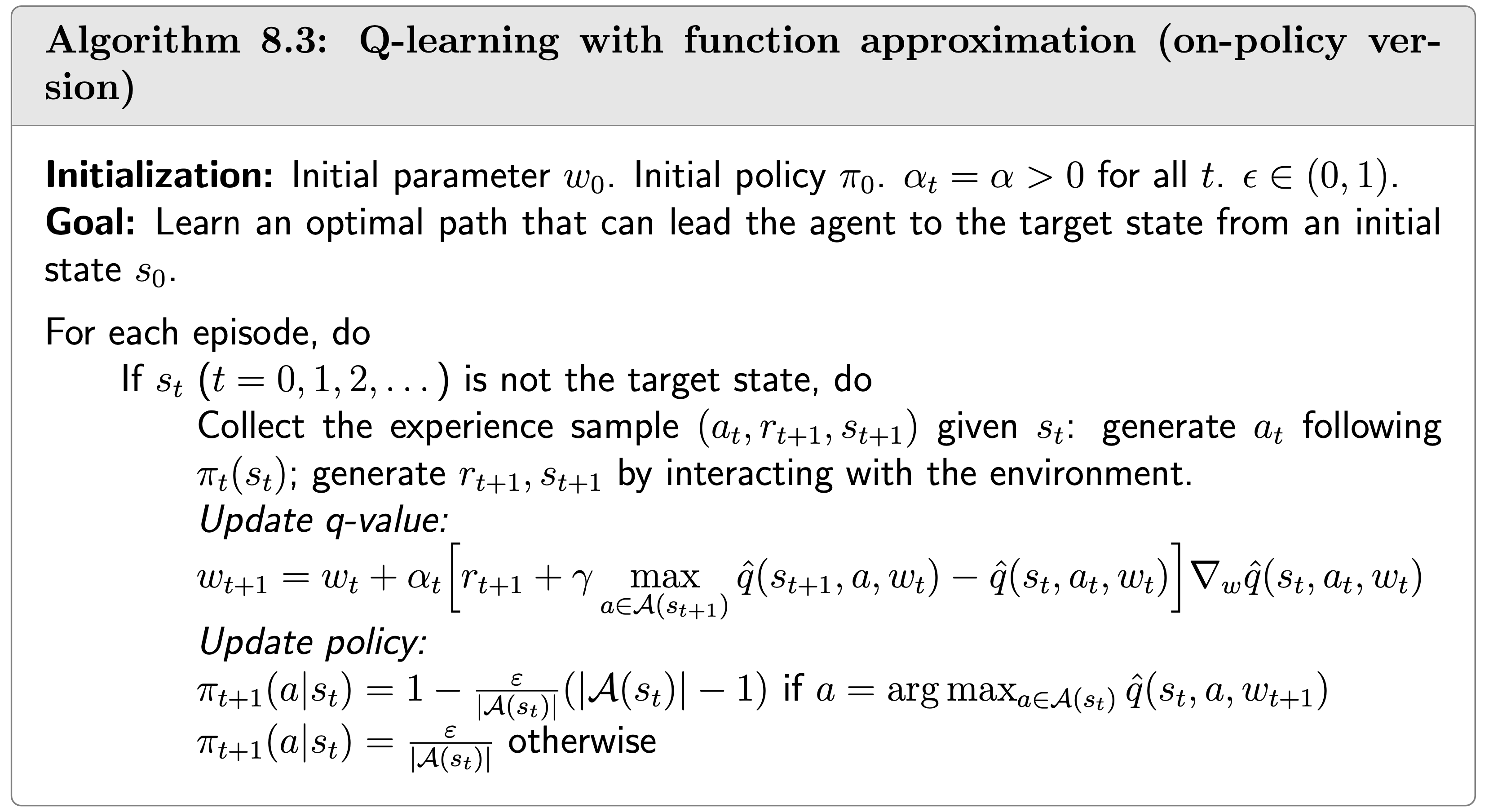
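The following is a minimal sketch of Sarsa with a linear action-value approximator, not a definitive implementation. The corridor environment, the one-hot feature map `phi`, the hyperparameters, and all names are assumptions chosen for illustration. With one-hot features the method reduces to tabular Sarsa; any other feature map of the same length could be swapped in.

```python
import random

N_STATES, ACTIONS = 5, (-1, +1)  # hypothetical corridor: move left or right

def phi(s, a):
    """Hypothetical one-hot feature vector over (state, action) pairs."""
    x = [0.0] * (N_STATES * len(ACTIONS))
    x[s * len(ACTIONS) + ACTIONS.index(a)] = 1.0
    return x

def q_hat(w, s, a):
    """Linear action-value estimate."""
    return sum(wi * xi for wi, xi in zip(w, phi(s, a)))

def epsilon_greedy(w, s, eps, rng):
    """Pick a random action with probability eps, else a greedy one (ties broken randomly)."""
    if rng.random() < eps:
        return rng.choice(ACTIONS)
    best = max(q_hat(w, s, a) for a in ACTIONS)
    return rng.choice([a for a in ACTIONS if q_hat(w, s, a) == best])

def sarsa_update(w, s, a, r, s_next, a_next, done, gamma, alpha):
    """One Sarsa step: the target uses the action actually taken in s_next."""
    target = r + (0.0 if done else gamma * q_hat(w, s_next, a_next))
    td_error = target - q_hat(w, s, a)
    return [wi + alpha * td_error * xi for wi, xi in zip(w, phi(s, a))]

def step(s, a):
    """Reaching the right end pays +1 and ends the episode; the left end is a wall."""
    s_next = min(max(s + a, 0), N_STATES - 1)
    done = s_next == N_STATES - 1
    return s_next, (1.0 if done else 0.0), done

def run_sarsa(episodes=200, gamma=0.9, alpha=0.1, eps=0.1, seed=0):
    rng = random.Random(seed)
    w = [0.0] * (N_STATES * len(ACTIONS))
    for _ in range(episodes):
        s = 0
        a = epsilon_greedy(w, s, eps, rng)
        done = False
        while not done:
            s_next, r, done = step(s, a)
            a_next = epsilon_greedy(w, s_next, eps, rng)
            w = sarsa_update(w, s, a, r, s_next, a_next, done, gamma, alpha)
            s, a = s_next, a_next
    return w
```

The value-update step has the same form as the state-value case, with the action-value estimate in place of the state-value estimate. The epsilon-greedy step plays the role of policy improvement.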

Q-learning with function approximation

Tabular Q-learning can also be extended to the case of function approximation. The update rule is

Here, the function approximator is

NOTE: Although Q-learning with function approximation can be implemented with neural networks, in practice deep Q-learning, also known as the deep Q-network (DQN), is used instead. DQN is not Q-learning in the strict sense, at least not the specific Q-learning algorithm introduced in my post, but it shares the core idea of Q-learning.
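For reference, a single Q-learning step with a linear approximator can be sketched as follows. This is an illustration under stated assumptions, not DQN: the one-hot feature map `phi`, the two-state example, and all names and numbers are hypothetical. The only difference from the Sarsa target is the maximum over next actions.

```python
def q_hat(w, x):
    """Linear action-value estimate for feature vector x."""
    return sum(wi * xi for wi, xi in zip(w, x))

def q_learning_update(w, phi, s, a, r, s_next, actions, done, gamma, alpha):
    """One Q-learning step for a linear approximator q_hat = w . phi(s, a)."""
    # The target bootstraps from the best next action, not the one actually taken.
    best_next = 0.0 if done else max(q_hat(w, phi(s_next, b)) for b in actions)
    x = phi(s, a)
    td_error = r + gamma * best_next - q_hat(w, x)
    # For a linear approximator the gradient w.r.t. w is the feature vector x.
    return [wi + alpha * td_error * xi for wi, xi in zip(w, x)]

def phi(s, a):
    """Hypothetical one-hot features for 2 states and 2 actions."""
    x = [0.0] * 4
    x[s * 2 + a] = 1.0
    return x

# Usage: from state 0, action 0 yields reward 1 and leads to state 1.
w = [0.0, 0.0, 0.0, 2.0]
w_new = q_learning_update(w, phi, s=0, a=0, r=1.0, s_next=1,
                          actions=(0, 1), done=False, gamma=0.5, alpha=0.5)
print(w_new)  # [1.0, 0.0, 0.0, 2.0]
```

In the usage example the TD error is 1 + 0.5 * 2 - 0 = 2, so the weight for the pair (state 0, action 0) moves by 0.5 * 2 = 1.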

[^1]: "TD learning problems" refers to the problems that TD learning algorithms aim to solve, i.e., estimating the state/action value function from a dataset generated by a given policy.