Q Learning

This chapter introduces Q learning, a Temporal-Difference (TD) learning method to estimate optimal action values, hen optimal policies. Previouly we have illustrated TD-learning of state values and action values. For these methods, we need to do policy improvement to get optimal policies.

Sources:

Q learning

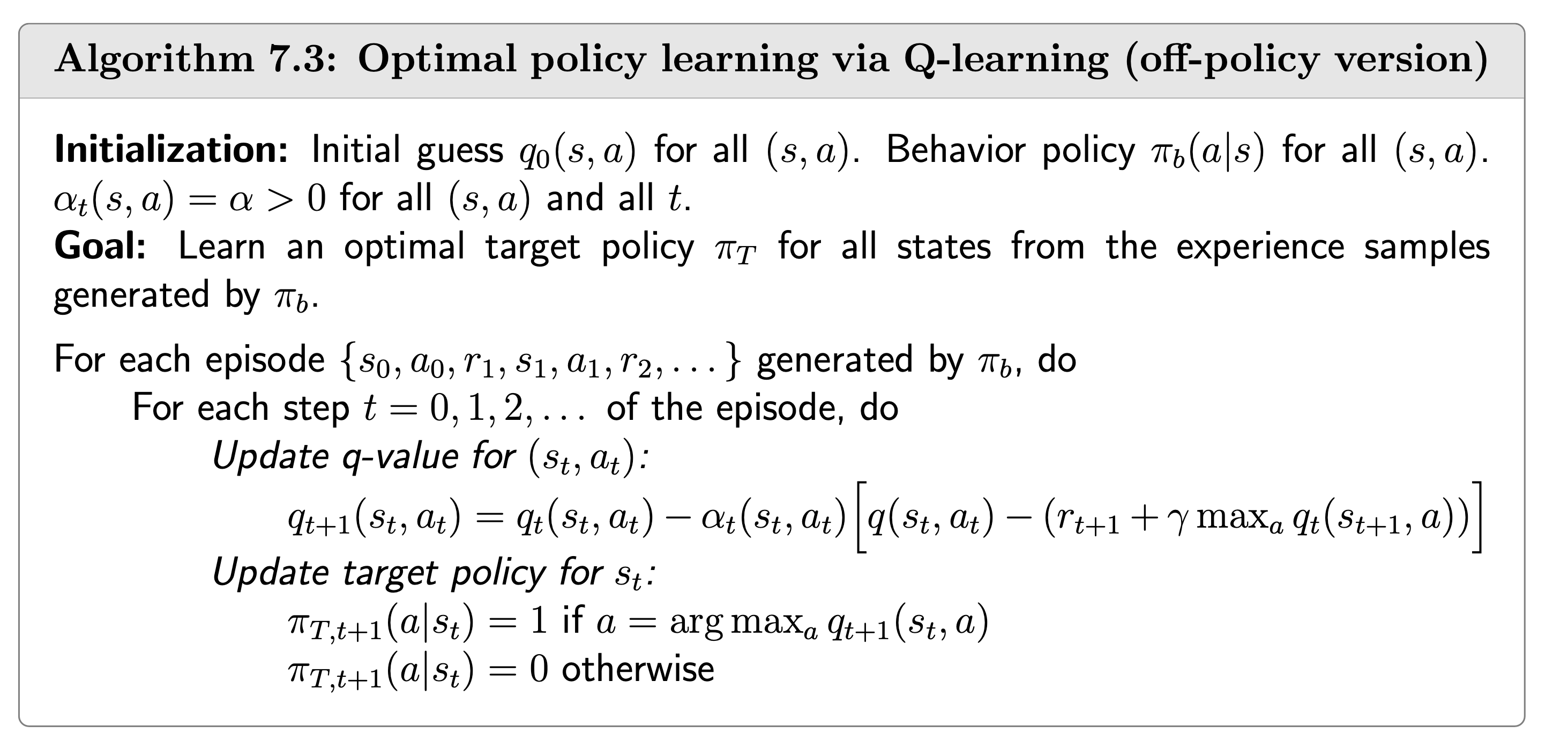

Given the data/experience \(\left\{\left(s_t, a_t, r_{t+1}, s_{t+1}, a_{t+1}\right)\right\}_t\) generated following the any policy \(\pi\), the TD learning algorithm to estimate the optimal action value function of policy \(\pi\), also called Q learning, is: \[ \begin{aligned} q_{t+1}\left(s_t, a_t\right) & =q_t\left(s_t, a_t\right)-\alpha_t\left(s_t, a_t\right)\left[q_t\left(s_t, a_t\right)-\left[r_{t+1}+\gamma \color{red}{\max _{a \in \mathcal{A}(s_{t+1})} q_t\left(s_{t+1}, a\right)}\right]\right] \\ q_{t+1}(s, a) & =q_t(s, a), \quad \forall(s, a) \neq\left(s_t, a_t\right) \end{aligned} \] where \(t=0,1,2, \ldots\)

\(q_t\left(s_t, a_t\right)\) is an estimate of \(q_\pi\left(s_t, a_t\right)\);

\(\alpha_t\left(s_t, a_t\right)\) is the learning rate depending on \(s_t, a_t\).

The action \(a_t\) is called the current action. It's the action from the current state that is actually executed in the environment, and whose Q-value is updated.

The action used in \(\max _{a \in \mathcal{A}(s_{t+1})} q_t\left(s_{t+1}, a\right)\) is called the target action. It has the highest Q-value from the next state, and used to update the current action’s Q value. Later we will see that, in DQN, the approximation of the target action is generated by a "target network", not the "main network" which generates the approximation of \(q_\pi\left(s_t, a_t\right)\).

Q-learning is very similar to Sarsa. They are different only in terms of the TD target: - The TD target in Q-learning is \(\color{red}{r_{t+1}+\gamma \max _{a \in \mathcal{A}(s_{t+1})} q_t\left(s_{t+1}, a\right)}\) - The TD target in Sarsa is \(\color{red}{r_{t+1}+\gamma q_t\left(s_{t+1}, a_{t+1}\right)}\).

Motivation

Its motivation is to solve \[ \color{green}{q(s, a)=\mathbb{E}\left[R_{t+1}+\gamma \max _a q\left(S_{t+1}, a\right) \mid S_t=s, A_t=a\right]}, \quad \forall s, a . \]

This is the Bellman optimality equation expressed in terms of action values.

Comparison with Sarsa and MC learning

Before further studying Q-learning, we first introduce two important concepts: on-policy learning and off-policy learning.

There exist two policies in a TD learning task:

- The behavior policy is used to generate experience samples.

- The target policy is constantly updated toward an optimal policy.

On-policy vs off-policy:

- When the behavior policy is the same as the target policy, such kind of learning is called on-policy.

- When they are different, the learning is called off-policy.

Advantages of off-policy learning: - It can search for optimal policies based on the experience samples generated by any other policies.

Sarsa is on-policy, since it aims to estimate the action value function from a dataset generated by a given policy \(\pi\).

MC learning is on-policy as well since it shares the same goal with Sarsa.

Q learning is off-policy because it solves the optimal action value function from a datadaset generated any policy \(\pi\).